I'm always hearing of organisations that are looking to embark on the journey of decomposing their monolith into microservices.

This post explores near real-time data migration challenges from monolithic to microservices architecture.

It covers several strategies (shared databases, APIs, and event-driven approaches) and includes a real-world example using change data capture (CDC) and event-driven architecture.

Why?

Many factors influence the decision to break down a monolith into microservices.

Moving to microservices comes with its own challenges. The problems listed below are common to a monolithic architecture in the average company, but a change of architecture alone won't solve them all.

Velocity

As a monolith grows, the complexity of the moving parts increases the cognitive load on the engineers, increasing the time to realise value for the end customers.

When one team adds 100 new tests that slow the pipeline by a minute, or someone breaks the build, everyone working on the monolith is affected.

Organisational

When a company reaches a specific size, it's not uncommon to have multiple squads that own their particular areas (onboarding squad, checkout squad, etc.).

With a monolith, as the number of people working on it increases, the risk of stepping on each other's toes rises. You start to see more merge conflicts, a need for clear ownership (Who should update this?), and security concerns (Am I the right person to change this?).

Performance & Cost

As a monolith application grows, its resource demand tends to increase: another scheduled job here, another high-throughput API there. When performance issues arise, the common workaround is to throw more compute resources or a larger database at the problem.

Technical Debt

While no architecture offers a silver bullet in this space, technical debt can be very challenging to resolve in a monolith, and it's not uncommon for a monolith to have business logic tangled across multiple domain boundaries. Each change comes with the risk of a large blast radius, which could impact millions of lines of code, and refactoring is frequently a long and slow process.

Aging Technology

Monoliths tend to have many years of tenure. The tech stack that was modern at the time is now considered old and out of fashion. Dependencies are long out of support, attracting talent becomes more challenging, and adding new functionality is often slow and risky.

Green Light

The key stakeholders are convinced and have given the green light to proceed, and now the difficult work starts. The How...

Each monolith to microservices journey is different. You may already have a mature golden path for building microservices, or you can pause new feature development. There are so many factors to consider for your journey.

Two things are for sure: we want this migration to succeed (how will you define and measure success?), and we want it to be timely, i.e., sooner rather than later.

Today, we will focus on one of these challenges: dealing with the data currently residing within a monolithic application.

The Data

Data comes in many shapes and sizes, and we have many options regarding access and storage.

We will focus on relational SQL databases (MySQL, Postgres, MSSQL, etc.), as in my experience these are some of the most common data stores used in monolithic applications.

We will look at approaches to allow our microservices to utilise this data.

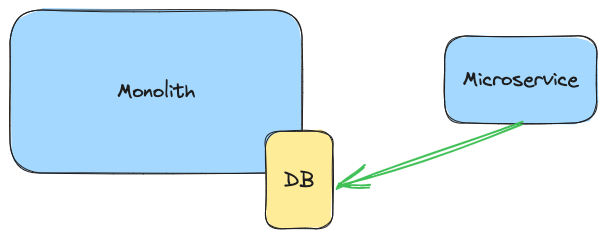

Shared Database

One approach is to use the same database as the monolith, and I can already sense some ears burning at the thought of this (database per service comes to mind).

One upside of this approach is that we can defer data migrations and focus on extracting business logic.

I have seen this approach work well in the short term when a team is not yet sure what data truly represents their domain model, or when some domains are yet to be extracted.

With modern database platforms, you can limit which data is accessible and which database operations can be performed, ensuring guardrails are in place.

Drawback

This approach has some downsides that come to mind:

- Schema evolution needs to take place in multiple locations.

- There is a risk of the database becoming (or remaining) a performance bottleneck or single point of failure.

- The data model is not optimised for its usage by each service.

- Easy access to other domains' data can quickly result in a distributed monolith due to the coupling of the data.

Summary

This approach is a stepping stone, and it can help to form a clearer understanding of a domain and provide quick access to data that has yet to migrate.

But you need to ensure good access controls and that this is a stage of migration, not the target end state.

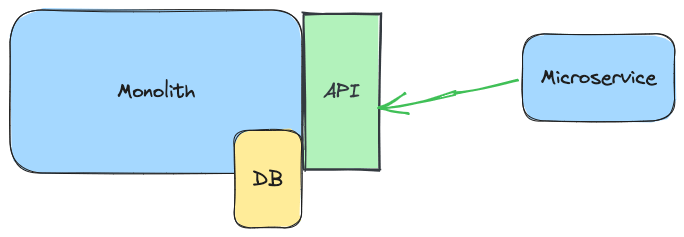

API

Rather than directly accessing the monolith database, we can expose APIs in the monolith, allowing access to the required data.

Doing so allows us to provide a clear contract while hiding the internals of the database. These APIs can be migrated directly to microservices in the future.

A common deciding factor for using an API approach is when data consistency is crucial. For example, the customer's latest account balance is checked and reserved before allowing a payment.
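As a minimal sketch of why consistency pushes this logic behind a single API, the check and the reservation can be performed as one atomic statement by the owner of the data. The `account` table, its `balance` and `reserved` columns, and the `reserve_funds` function are assumptions made for illustration (amounts are in minor units), and SQLite stands in for the monolith database.

```python
import sqlite3


def reserve_funds(conn: sqlite3.Connection, account_id: int, amount: int) -> bool:
    """Reserve `amount` only if the unreserved balance covers it.

    The check and the update happen in a single statement, so two
    concurrent callers cannot both reserve the same funds.
    """
    with conn:  # commits on success, rolls back on an exception
        cur = conn.execute(
            "UPDATE account SET reserved = reserved + ? "
            "WHERE id = ? AND balance - reserved >= ?",
            (amount, account_id, amount),
        )
    return cur.rowcount == 1  # one row changed means the reservation succeeded


# Hypothetical schema and data, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE account (id INTEGER PRIMARY KEY, balance INTEGER, reserved INTEGER)"
)
conn.execute("INSERT INTO account VALUES (1, 100, 0)")
conn.commit()

first = reserve_funds(conn, 1, 70)   # enough unreserved balance
second = reserve_funds(conn, 1, 40)  # only 30 left unreserved, so this is refused
```

A microservice calling an endpoint backed by something like `reserve_funds` gets a definitive answer at the time of the call, which an eventually consistent copy of the balance could not guarantee.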

Drawback

- Building these APIs would require careful design and build within the monolith.

- The correct security measures need to be in place (are these APIs publicly accessible, how are credentials managed, etc.).

- It can be challenging to migrate these APIs away from the monolith in the future.

- Additional traffic and overhead within the monolith.

- Availability and scalability are coupled with the monolith.

Summary

This approach works well when the monolith release cycle does not become a bottleneck. If you consider the future state of the domain and apply this to the APIs, these can form the foundation of future microservice APIs.

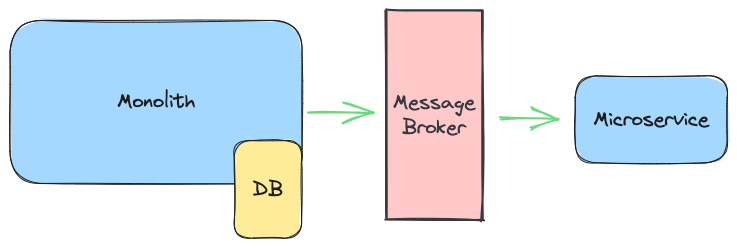

Events

We can look at an asynchronous approach to solving the data problem by emitting events using a message broker.

Message Broker

We can integrate the monolith to publish events directly to a message broker when things of interest occur.

In doing so, the monolith will proactively inform the platform when something interesting has happened.

With the monolith emitting the event directly to the message broker, no additional infrastructure is required compared to other approaches.

Drawback

- Monolith may need additional dependencies to support the target message broker.

- The approach is eventually consistent, which is not always suitable.

- Additional overhead of publishing events from within the monolith.

- A risk of missing event triggers due to multiple code paths that can update the domain.

- Additional failure mode (What if the event fails to publish?).

- Additional work is required to sync all existing data.

- A deployment of the monolith application is required.
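The failed-publish drawback in the list above can be sketched as follows. This is an illustration only: `FlakyBroker` and `update_customer_name` are hypothetical names, a plain dictionary stands in for the monolith database, and no real broker client is used.

```python
class FlakyBroker:
    """Hypothetical in-memory broker that can be told to fail."""

    def __init__(self, fail: bool = False) -> None:
        self.fail = fail
        self.published: list[dict] = []

    def publish(self, topic: str, event: dict) -> None:
        if self.fail:
            raise ConnectionError("broker unavailable")
        self.published.append({"topic": topic, **event})


def update_customer_name(db: dict, broker: FlakyBroker, customer_id: int, name: str) -> bool:
    """Write to the 'database', then publish. Returns True if the event was published."""
    db[customer_id] = name  # step 1: the state change is already visible
    try:
        broker.publish("customer-updated", {"id": customer_id, "name": name})  # step 2
        return True
    except ConnectionError:
        # The write has happened but the event has not: without a retry
        # mechanism or an outbox, downstream consumers never hear about it.
        return False


db: dict[int, str] = {}
broker = FlakyBroker(fail=True)
published = update_customer_name(db, broker, 1, "Alexandra")
```

After this runs, the database holds the new name while the broker holds nothing, which is exactly the divergence the monolith would have to detect and repair.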

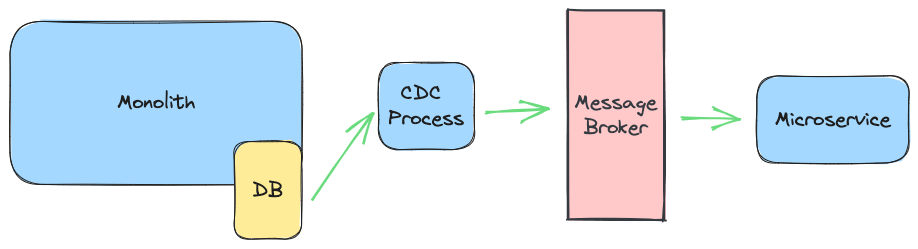

CDC

We can use CDC (change data capture) to inform us of all changes made within the monolith database.

Using a CDC approach allows us to reduce coupling between the monolith and microservices, as neither system needs to be directly aware of the other.

The CDC process emits the changes made to a given table, and you have a few options which can be mixed and matched depending on your requirements.

Existing Tables

The first approach is to emit changes from the table as is, e.g. any changes made in the customer table are sent using the same structure.

insert into customer(id, name) values(1, 'Alexandra')

A change capture process would allow us to emit an event that looked like this:

    {
      "before": null,
      "after": {
        "id": "1",
        "name": "Alexandra"
      },
      ...
    }
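A consumer can infer what kind of change occurred from which of the two row images is present. The sketch below assumes the envelope carries only the `before` and `after` keys shown above; the elided metadata is ignored, and `classify_change` is a hypothetical helper, not part of any CDC tool.

```python
import json


def classify_change(event: dict) -> str:
    """Infer the kind of change from the before/after row images."""
    before, after = event.get("before"), event.get("after")
    if before is None and after is not None:
        return "insert"  # no previous row image, so the row is new
    if before is not None and after is not None:
        return "update"  # both images present, so the row changed
    if before is not None and after is None:
        return "delete"  # no new row image, so the row was removed
    raise ValueError("event has neither a before nor an after image")


raw = '{"before": null, "after": {"id": "1", "name": "Alexandra"}}'
kind = classify_change(json.loads(raw))
```

Many CDC tools also include an explicit operation field in their metadata; where that is available, it is usually the more reliable signal.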

Outbox

For more complex data, you can format a specific payload and add it to a dedicated table, e.g., `outbox`.

One way is to join tables together and save the result.

insert into outbox(message) values('{"name": "Alexandra", "address": { "postcode": "A postcode"} }')

A change capture process would allow us to emit an event that looked like this:

    {
      "before": null,
      "after": {
        "message": {
          "name": "Alexandra",
          "address": {
            "postcode": "A postcode"
          }
        }
      },
      ...
    }
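The value of an outbox table comes from writing it in the same transaction as the business data, so the two can never disagree. Here is a minimal sketch of that idea, using SQLite in place of the monolith database. The `create_customer` function, the `fail_before_commit` flag and the `id` column on `outbox` are assumptions added for illustration.

```python
import json
import sqlite3


def create_customer(conn, customer_id, name, postcode, fail_before_commit=False):
    """Insert the customer row and its outbox message in one transaction."""
    message = json.dumps({"name": name, "address": {"postcode": postcode}})
    with conn:  # both inserts commit together, or neither does
        conn.execute("INSERT INTO customer(id, name) VALUES (?, ?)", (customer_id, name))
        conn.execute("INSERT INTO outbox(message) VALUES (?)", (message,))
        if fail_before_commit:
            raise RuntimeError("simulated failure")  # triggers a rollback


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("CREATE TABLE outbox (id INTEGER PRIMARY KEY AUTOINCREMENT, message TEXT)")
conn.commit()

create_customer(conn, 1, "Alexandra", "A postcode")
try:
    create_customer(conn, 2, "Sam", "Another postcode", fail_before_commit=True)
except RuntimeError:
    pass  # the failed call leaves no customer row and no outbox row behind

customers = conn.execute("SELECT COUNT(*) FROM customer").fetchone()[0]
outbox_rows = conn.execute("SELECT COUNT(*) FROM outbox").fetchone()[0]
```

The CDC process then only needs to watch the `outbox` table, and every message it emits corresponds to a change that was actually committed.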

Drawback

- The CDC output often needs additional enrichment, which can add complexity.

- The approach is eventually consistent, which is not always suitable.

- The architecture can be more complex due to more moving parts.

- The CDC process can become a critical failure point in the infrastructure.

- You need to configure the database to support CDC; not all hosted providers support this.

- The emitted changes are tightly coupled with the monolith database schema unless using an outbox table.

Summary

Publishing events representing the domain allows a clean approach for decoupled data sharing between the monolith and your microservices.

If done well, the emitting of these domain events will, over time, be migrated away from the monolith, with the relevant microservices taking over ownership of emitting these events.

Past Project

One project I've worked on required us to solve the problem of accessing the data within the monolith.

We had several constraints and considerations:

- We needed to avoid additional resource pressure on the monolith.

- Over the next six months, we would increase the total squad count from 1 to 6.

- We had three months to execute and deliver a production-ready solution.

Requirements

The business was moving to a new CRM, and when we had new customer sign-ups or profile updates, this data needed to be in the CRM in near real-time for the customer support agents.

The CRM didn't support a pull model (i.e. it couldn't fetch data from our platform).

Approach

With these constraints and requirements, we opted for an event-driven approach using CDC.

Step 1. Monolith

Our monolith application required no changes, and was unaware of any of the new components.

The database only needed minor configuration updates, such as the binary log (binlog) retention period.

Step 2. DMS

We needed a tool to perform the CDC process, and two good options stood out.

These were Debezium and AWS Database Migration Service (AWS DMS). We felt that Debezium was the more feature-rich offering and would provide a lot of flexibility. However, we ended up settling on AWS DMS.

Our primary reason was that we were a small team with limited time and resources. We needed to keep operational overheads as low as possible. AWS DMS is a fully managed offering, allowing us to set up a working solution with minimal effort.

We used Terraform to manage the DMS infrastructure and replication task.

Step 3. Kafka

Kafka was the planned backbone of the microservice estate, and we were using Amazon Managed Streaming for Apache Kafka (Amazon MSK).

DMS has out-of-the-box support for Kafka as a target destination, and we managed these resources with Terraform.

Step 4. CDC Enrichment Microservice

This microservice was responsible for reading the raw CDC events from Kafka and shaping them into domain events.

We used the Kafka Streams library to manipulate the CDC data into the desired format.

We had several different operations to apply to form the domain events we wanted, such as filtering, joining, restructuring and aggregation.
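The shape of such a pipeline can be sketched in plain Python. This is not the Kafka Streams API and not the code we ran; it is a stand-in that shows filtering, joining and restructuring on a list of events. The `table` field, the `address` table with a `customer_id` column, and the `to_domain_events` function are assumptions made for illustration.

```python
from typing import Iterable, Iterator


def to_domain_events(cdc_events: Iterable[dict]) -> Iterator[dict]:
    """Turn raw row-level changes into customer domain events."""
    customers: dict[str, dict] = {}  # latest customer row, keyed by customer id
    addresses: dict[str, dict] = {}  # latest address row, keyed by customer id
    for event in cdc_events:
        row = event.get("after")
        if row is None:  # filter: deletes are ignored in this sketch
            continue
        table = event.get("table")
        if table == "customer":
            key = row["id"]
            customers[key] = row
        elif table == "address":
            key = row["customer_id"]
            addresses[key] = row
        else:  # filter: tables that do not feed this domain event
            continue
        if key in customers and key in addresses:  # join on customer id
            yield {  # restructure into the domain event shape
                "name": customers[key]["name"],
                "address": {"postcode": addresses[key]["postcode"]},
            }


events = [
    {"table": "customer", "before": None, "after": {"id": "1", "name": "Alexandra"}},
    {"table": "audit_log", "before": None, "after": {"id": "9"}},
    {"table": "address", "before": None, "after": {"customer_id": "1", "postcode": "A postcode"}},
]
domain_events = list(to_domain_events(events))
```

In a streaming library the two dictionaries would be replaced by durable, partitioned state, but the logic of waiting until both sides of the join are known stays the same.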

For many reasons, we did this in a new microservice rather than the monolith. A few notable ones are:

- The monolith had a slow release cadence, while the microservices were deployable in minutes or hours.

- We could achieve a higher throughput and lower latency with the microservice as it could more easily adapt to the traffic volume through our auto-scaling policies.

As the domain the microservices owned expanded, they took over ownership of emitting these domain events. It was transparent to other consumers, enabling a smooth transition from the monolith application.

Step 5. Back To Kafka

With the enriched CDC events that formed the target domain events, we would put these back into Kafka, and these domain events were now accessible by any microservice that wished to subscribe to them.

Step 6. CRM Processor Microservice

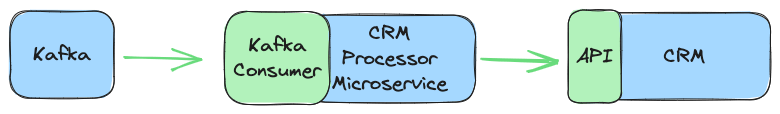

This service was responsible for taking the data from the domain event topics, in this case from the topic domain.namespace.customer, and converting it into API requests that the CRM would accept.

The microservice is only aware of the outcome of the data pipeline, and it uses a simple Kafka consumer to consume the domain topic.
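The conversion step itself is a small mapping from a domain event to an HTTP request. The sketch below builds the request without sending it. The endpoint URL, the CRM field names (`fullName`, `postcode`) and the `build_crm_request` function are hypothetical, since the real CRM's API is not described here.

```python
import json
import urllib.request

CRM_URL = "https://crm.example.com/api/customers"  # hypothetical endpoint


def build_crm_request(domain_event: dict) -> urllib.request.Request:
    """Map a customer domain event onto the payload the CRM expects."""
    body = json.dumps(
        {
            "fullName": domain_event["name"],  # hypothetical CRM field names
            "postcode": domain_event["address"]["postcode"],
        }
    ).encode("utf-8")
    return urllib.request.Request(
        CRM_URL,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )


request = build_crm_request({"name": "Alexandra", "address": {"postcode": "A postcode"}})
```

Keeping the mapping in a pure function like this makes it easy to test separately from the Kafka consumer loop and the network call.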

Step 7. CRM

The CRM is the end sink for the data, a popular cloud-hosted CRM solution which exposes REST APIs.

Summary

The first five steps above were all reusable components forming part of our data pipeline from the monolith application.

It allowed us to move data out of the monolith in near real-time in a safe and controlled manner.

Adding new domain events was as simple as:

- Configuring a small amount of Terraform to register a DMS task for tables not already set up.

- Adding a Kafka Streams pipeline to shape the domain event.

And that was it. The domain events are now ready for anyone who needs them in the rest of the platform.

Less than a couple of hours of work and new events would be available in production with no changes required to the monolith application.

If I were to change anything with the implementation above, I would consider using Apache Flink rather than Kafka Streams. When you need to support an architecture that doesn't revolve only around Kafka and needs to support a wide range of different data sources, streaming/batch data modes, and advanced data manipulation, Apache Flink is a very appealing solution.

We learned many lessons from running a data pipeline, and there are loads of details I have skimmed over, but I will save those for a future post where we explore the developer experience.

Closing Thoughts

We have covered a few different approaches to unlocking the data that lives within the monolith.

While it's not uncommon to deploy any combination of the strategies mentioned today throughout the lifetime of a migration project, these strategies form only one part of the giant puzzle. Understanding the trade-offs and when to apply them within the context of your project is the hard part.

What are your thoughts? What approaches have you used? Do let us know!

Thank you for reading.